For a project I’ve been working on lately, I wanted to experiment with using MIDI files for music and playing them back in real time. The project uses Unity and FMOD Studio, which has some very basic MIDI support but doesn’t let you modify the input data at runtime.

I was inspired by this video about dynamic music in Pikmin 2, as well as the soundtrack in the original System Shock which has fancy MIDI-based transitions between action and exploration music. For this stage of the experiment though, I just wanted to get basic playback of the instruments working correctly.

Since FMOD won’t let me poke at the MIDI info if I play it back natively that way, I needed a way to:

- Read the MIDI file myself and get the note info.

- Find the exact time a sound should be played.

- Translate the note value and General MIDI instrument into the sound that should be played.

- Set the sound to the correct pitch.

- Hope that FMOD is performant enough to let me do all this with a substantial amount of data.

Reading a MIDI File

This part was actually very easy: I used DryWetMIDI to handle both the file reading and the playback stages. It’s a very nice library, easy to use and well documented. I can’t speak to its usefulness in a production-scale project (I’d definitely want to measure how many heap allocations it generates, as a lot of non-Unity-specific .NET projects are not careful about this), but for this little prototype it made things very easy.

One challenge was ensuring that the MIDI playback object had a stable clock pulse for updating, to ensure that framerate or CPU fluctuations did not cause the timing of note playback to become unstable. Initially I tried using Unity’s FixedUpdate(), and it was mostly fine but could occasionally sound a tiny bit off.

The solution I settled on was actually using FMOD’s own audio thread to tick the MIDI playback. Audio already needs very precise and stable timing to work well, so it was a natural fit. Getting access to a callback for the audio thread was a little tricky though – FMOD is generally well documented, but the Unity integration is missing information on how to do certain things that would be simple in C++.

For instance, to set up a callback for audio update in C++, it is very simple:

FMOD_RESULT F_CALLBACK MyCallback(FMOD_SYSTEM *system,

FMOD_SYSTEM_CALLBACK_TYPE type,

void *commanddata1,

void *commanddata2,

void *userdata) {

// Do the MIDI update

return FMOD_OK;

}

void SetupFMOD() {

FMOD::System *mySystem = ...;

mySystem->setCallback(MyCallback, FMOD_SYSTEM_CALLBACK_PREUPDATE);

}

But due to C#’s managed nature, setting up a callback was a little less straightforward. Borrowing from other FMOD C# examples, and cheating with static variables and methods to make memory handling easier, I arrived at this solution:

using System;

using System.Diagnostics;

using System.Runtime.CompilerServices;

using FMOD;

using FMODUnity;

using UnityEngine;

using Debug = UnityEngine.Debug;

[CreateAssetMenu(menuName = "Radish/Demo FMOD Callback Handler")]

public class DemoFmodCallbackHandler : PlatformCallbackHandler

{

public delegate void OnFMODSystemUpdate(TimeSpan deltaTime);

public static event OnFMODSystemUpdate OnSystemUpdate

{

// The locks are to ensure that FMOD doesn't try and invoke the callback at the same time as

// someone might be subscribing to the callback. This might not actually be an issue,

// but I wanted to make it watertight and avoid having to debug race conditions.

add

{

lock (s_Lock)

s_SystemUpdateCallback += value;

}

remove

{

lock (s_Lock)

s_SystemUpdateCallback -= value;

}

}

private static OnFMODSystemUpdate s_SystemUpdateCallback;

private static readonly object s_Lock = new();

private static Stopwatch s_Timer;

public override void PreInitialize(FMOD.Studio.System system, Action<RESULT, string> reportResult)

{

s_Timer = Stopwatch.StartNew();

if (!Try(system.getCoreSystem(out var coreSystem)))

return;

if (!Try(coreSystem.setCallback(OnCoreSystemPreUpdate, SYSTEM_CALLBACK_TYPE.PREUPDATE)))

return;

Debug.Log("Successfully hooked FMOD pre-update", this);

return;

bool Try(RESULT result, [CallerArgumentExpression("result")] string resultText = "")

{

if (result == RESULT.OK)

return true;

reportResult(result, $"Failed: {resultText}");

return false;

}

}

// This attribute marks the method as callable from native code, which AOT builds (e.g. IL2CPP)

// need in order to generate the native-to-managed wrapper for FMOD to call. I love C++.

[AOT.MonoPInvokeCallback(typeof(SYSTEM_CALLBACK))]

private static RESULT OnCoreSystemPreUpdate(IntPtr system, SYSTEM_CALLBACK_TYPE type, IntPtr commandData1, IntPtr commandData2, IntPtr userdata)

{

s_Timer.Stop();

try

{

lock (s_Lock)

{

s_SystemUpdateCallback?.Invoke(s_Timer.Elapsed);

}

}

catch (Exception ex)

{

Debug.LogException(ex);

}

finally

{

s_Timer.Restart();

}

return RESULT.OK;

}

}

This asset can then be assigned to your FMOD settings and wow, you now have a callback for every audio thread update! It is important to keep in mind that Unity is largely not thread-safe, however, so you have to be careful about which APIs you use. In this case, later on I need to sync back to the main thread to actually create FMOD event instances.

I can then use this callback elsewhere to call TickClock() on a DryWetMIDI Playback instance and respond to the playback events with very accurate timing. From a quick benchmark I did, FMOD seems to be internally updating its audio thread around every 20ms (on macOS at least), which is really quite responsive. For reference, in REAPER with my Steinberg UR22C audio interface I can get a round-trip latency of 15ms using ASIO drivers, which is good enough for me to play guitar with virtual effects and not even notice a delay.

Note Decoding

Now I can get accurate events when a MIDI event occurs, so the next step is figuring out which sound to play and the pitch it must be played at. This was mostly a lot of trial and error, since MIDI is actually quite fast and loose with how instruments are assigned to different channels. I expected that information to be provided up-front somewhere in the file, but it is actually part of the same stream of commands that the notes are! This means that the assigned instrument for a channel can change mid-song, or not even be assigned until part-way in. This makes sense thinking about the original purpose of MIDI, but makes things annoying for us here in 2024.

My solution is to maintain a Dictionary that maps the channel ID to a ‘program’ ID (essentially the index of which General MIDI instrument to use), and to look up which instrument to use on each note-on event. If there is no program assigned, we just don’t play that note. Luckily all the MIDI files I’ve tried so far contain properly formed data, so this hasn’t caused any issues.
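As a rough sketch of that bookkeeping: the class below is a hypothetical helper, not code from the project, and it assumes channels and programs arrive as the plain 0-based integers used in the MIDI stream. It only remembers the latest Program Change per channel and reports whether a channel has one yet.

```csharp
using System.Collections.Generic;

// Hypothetical helper: remembers the most recent Program Change per MIDI channel.
public sealed class ChannelProgramTracker
{
    // Channel (0-15) -> program number (0-127) as carried in the MIDI stream.
    private readonly Dictionary<int, int> _programByChannel = new Dictionary<int, int>();

    // Call when a Program Change event arrives. A later change on the same
    // channel simply overwrites the earlier one, which covers mid-song switches.
    public void OnProgramChange(int channel, int program)
    {
        _programByChannel[channel] = program;
    }

    // Call on note-on. Returns false when the channel has no program yet,
    // in which case the note is skipped.
    public bool TryGetProgram(int channel, out int program)
    {
        return _programByChannel.TryGetValue(channel, out program);
    }
}
```

If the tracker is fed from the audio-thread callback and read only there, it needs no locking; sharing it with the main thread would.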

As mentioned earlier, note playback is somewhere I have to sync back to the main thread because FMOD won’t like playing events from its own audio thread. Perhaps there is a more efficient way to handle this that requires less error-prone and potentially expensive operations, but it was fine for this prototype.
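One common way to do this kind of hand-off is a thread-safe queue that the callback fills and the main thread drains. The sketch below is an assumption about how it could look rather than the code used in the project; `MainThreadQueue` is a made-up name, and in Unity `Drain()` would be called from a MonoBehaviour’s `Update()`.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical helper: work items are queued from any thread and run later
// on whichever thread calls Drain().
public sealed class MainThreadQueue
{
    private readonly ConcurrentQueue<Action> _pending = new ConcurrentQueue<Action>();

    // Safe to call from any thread, e.g. from the FMOD update callback.
    public void Enqueue(Action work)
    {
        _pending.Enqueue(work);
    }

    // Call from the main thread once per frame. Returns how many items ran.
    public int Drain()
    {
        int count = 0;
        while (_pending.TryDequeue(out Action work))
        {
            work();
            count++;
        }
        return count;
    }
}
```

The trade-off is that a queued note only starts on the next frame, so the note start is quantized to the frame rate even though the MIDI clock itself ticks from the audio thread.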

Playback

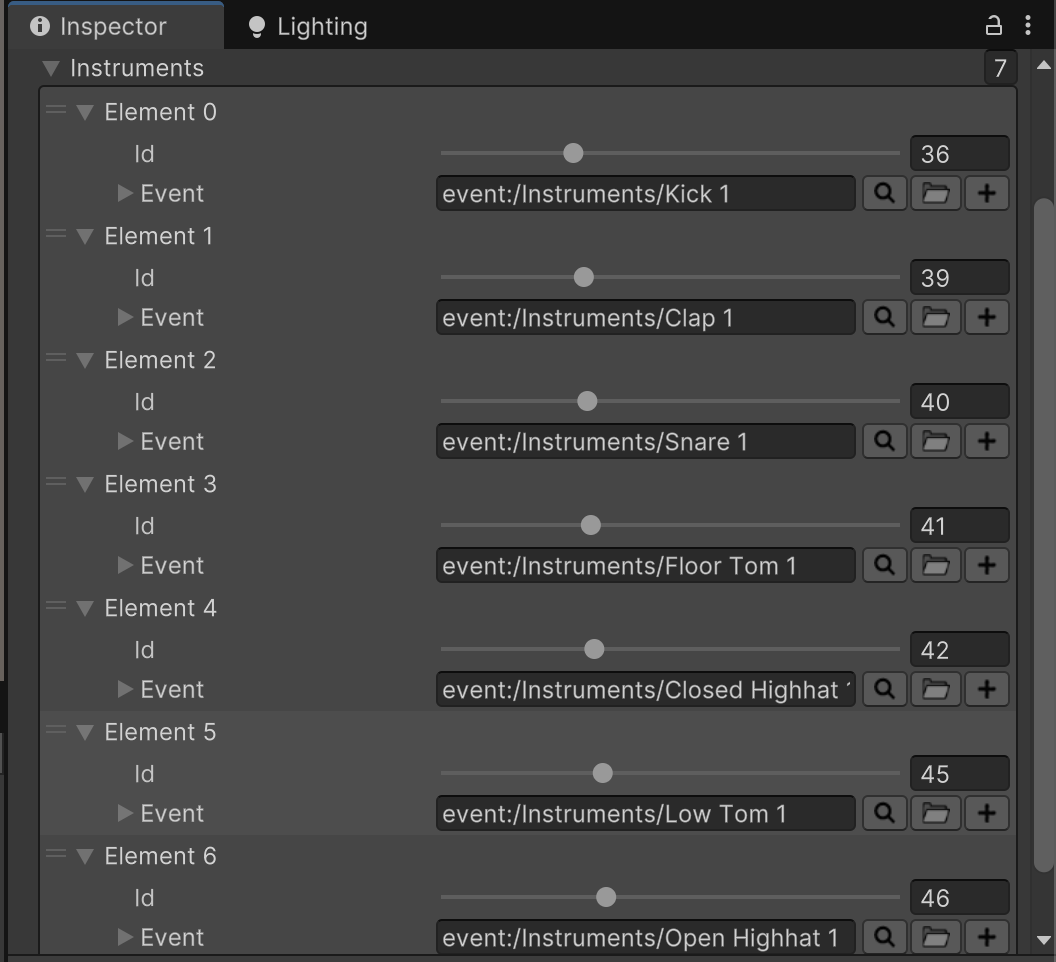

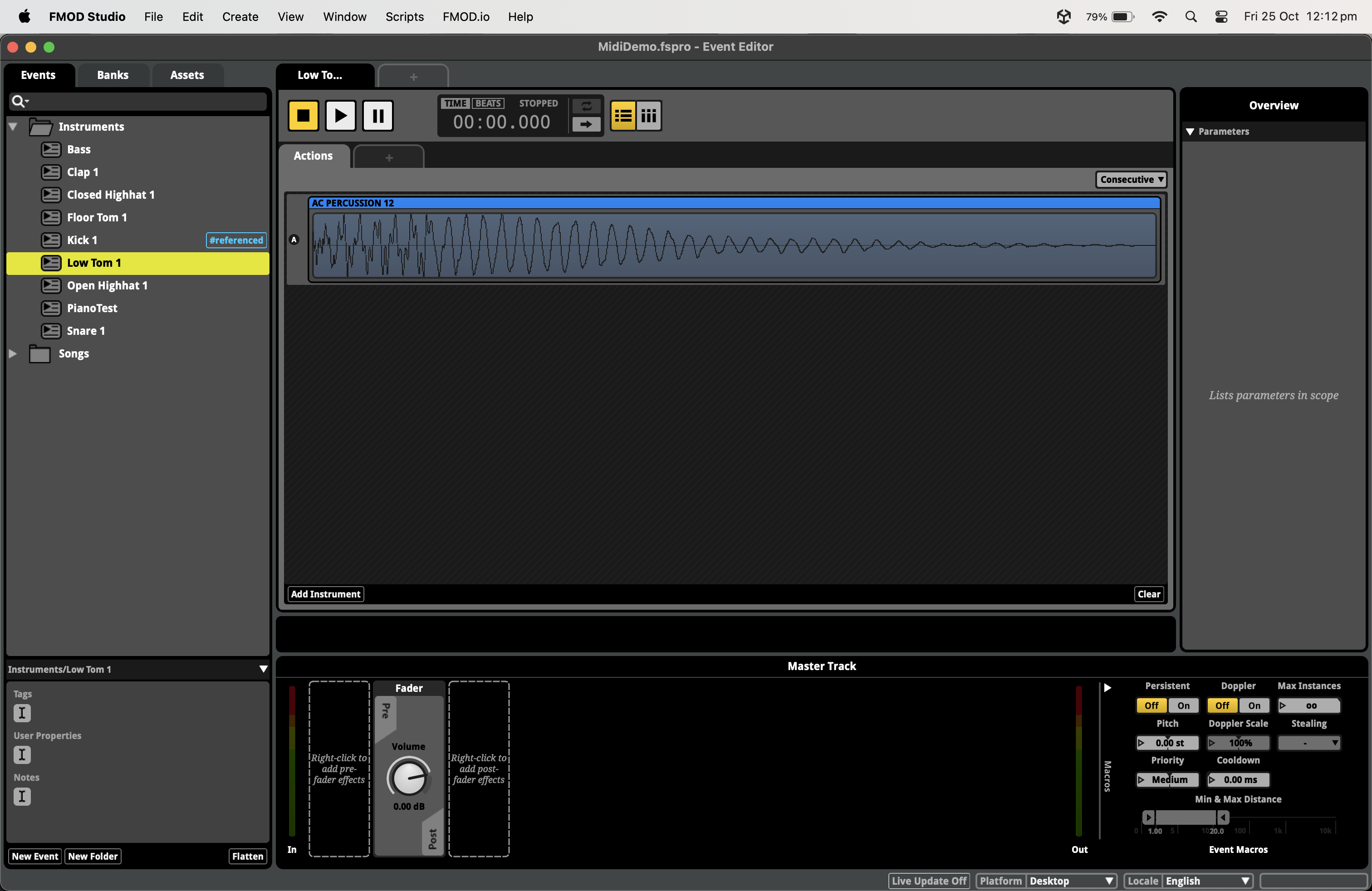

To map program IDs to an actual FMOD event to play, I created a PatchSet asset that simply maps the numbers 1-128 to an EventReference. To play back a file properly, I need two of these, since drums in MIDI are hardcoded to channel 10 and I’m not sending the note number directly into FMOD (and building a parameter-based switch for this in FMOD Studio would be very tedious).
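The choice between the two patch sets could be sketched like this. Everything here is hypothetical: the `PatchLookup` name, the assumption that the MIDI library counts channels from zero (so channel 10 is index 9), and the assumption that the drum set is keyed by note number while the melodic set is keyed by program, both shifted to the 1-128 range.

```csharp
// Hypothetical helper: decides which patch set a note belongs to and which
// 1-128 key to look up in it.
public static class PatchLookup
{
    // General MIDI puts percussion on channel 10, which is index 9 when
    // channels are counted from zero (an assumption about the MIDI library).
    public const int DrumChannel = 9;

    public struct Result
    {
        public bool UseDrumSet;    // look in the drum patch set instead of the melodic one
        public int PatchId;        // 1-128 key into the chosen patch set
        public bool NoPitchAdjust; // drum samples play at their recorded pitch
    }

    public static Result Resolve(int channel, int program, int noteNumber)
    {
        bool isDrum = channel == DrumChannel;
        return new Result
        {
            UseDrumSet = isDrum,
            // Assumed keying: drums by note number, everything else by program,
            // both shifted from the 0-127 wire values to 1-128.
            PatchId = (isDrum ? noteNumber : program) + 1,
            NoPitchAdjust = isDrum,
        };
    }
}
```

For example, note 38 on the drum channel resolves to key 39 in the drum set with no pitch adjustment, while a note on channel 0 with program 24 resolves to key 25 in the melodic set.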

Here I make an assumption that all “base” sounds for pitched instruments are tuned to middle C, which lets me use the note number to calculate an offset in semitones which I pass into the FMOD Event Instance for that note with setPitch().

if (!patchSet.TryGetEventForInstrument(patchId, out var eventId))

return;

var instance = RuntimeManager.CreateInstance(eventId);

if (!noPitchAdjust)

{

// Maths to convert semitone difference to an FMOD pitch offset.

var noteOffsetFromMiddleC = note - 60;

instance.setPitch(Mathf.Clamp(Mathf.Pow(2, noteOffsetFromMiddleC / 12.0f), 0, 100));

}

instance.start();

instance.release();

I’m not keeping instances around yet, which I would need to do to properly handle note length and pitch bends, so everything just plays as a one-shot. I didn’t want to mess around with loop regions in FMOD and perfectly lining up audio samples at 9pm, but for full MIDI file compatibility I would need these things.

Also, since pitch modifying in this way has a quite limited range (the FMOD docs seem to say that 2 octaves is about the limit), a robust solution would need multiple samples for each pitch range bracket and some way to switch between them. Definitely a lot more work which I did not attempt.
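A minimal sketch of what that switching could look like, assuming each instrument comes with a handful of samples recorded at known root notes. The `MultiSample` class and the root-note list are illustrative only; nothing like this exists in the prototype.

```csharp
using System;

// Hypothetical helper: picks the sample whose root note is closest to the
// requested note, then computes the remaining pitch ratio from that root.
public static class MultiSample
{
    // Returns the root note nearest to 'note'. On a tie, the root listed
    // first wins.
    public static int NearestRoot(int note, int[] sampleRoots)
    {
        if (sampleRoots == null || sampleRoots.Length == 0)
            throw new ArgumentException("At least one sample root is required.", nameof(sampleRoots));

        int best = sampleRoots[0];
        foreach (int root in sampleRoots)
        {
            if (Math.Abs(note - root) < Math.Abs(note - best))
                best = root;
        }
        return best;
    }

    // Equal-temperament pitch ratio for the offset from the root:
    // 2^(semitones / 12), so +12 semitones doubles the playback rate.
    public static double PitchRatio(int note, int root)
    {
        return Math.Pow(2.0, (note - root) / 12.0);
    }
}
```

With roots at 36, 60 and 84, note 79 picks the sample at 84 and plays it at a ratio of about 0.75, which is a five-semitone shift instead of the nineteen it would take from middle C.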

Room to Improve

As a proof of concept I am quite happy with how this turned out. I managed to get it working in one evening, and with a few more I could probably get the note sustain, pitch bends and note velocity working too.

What would take more work is manipulating the MIDI data itself at runtime. I haven’t even attempted this yet, and it is probably the most complex part of this idea (I’ll need to learn DryWetMIDI inside-out, I think). I definitely understand why dynamic music in games moved to audio stream loops as soon as storage space and memory were no longer limiting factors.

For the larger project this prototype was for, I’m actually less sure now that using MIDI would be a good choice, at least for most of the music in it. Setting all this up for different sounds, getting everything to sound just right, etc. seems like it’s probably not worth the effort. That’s before considering that General MIDI support (in the sense that games use it) is an afterthought in most DAWs these days, and trying to get instruments to sound the same in a DAW as in FMOD… yeah, perhaps it isn’t worth it.

Despite this, it was an interesting experiment that took me into a little weird bit of the software world I’m not otherwise too familiar with.

The audio folks over at Nintendo were built different for having to deal with this kind of setup for music for so long (games were using sample-based music like this well into the Wii and DS eras). And back in the 90s, you likely didn’t have a choice unless you wanted to use 90% of your CD capacity for streaming music, if you were even lucky enough to release games on CD.

Soon I’d like to put the Unity project for this up on GitHub once I scrub all the legally actionable audio samples and poor quality MIDIs of 20th Century pop music from it. I’ll add the link here once that’s done.